With the CPU completed, it is time to think about I/O, so that I can play with the system more interactively than by plugging EPROM chips in and out and attaching logic probes to the data and address buses. At first, my plan was to build a PS/2 keyboard controller (input) and a rudimentary LCD display (output). Armando Acosta convinced me that this was not the right way. Minicomputers from the era when TTL was a mainstream technology had no keyboards or video. Operators controlled them through front panels full of lights and switches, or communicated with the computer from a terminal over a serial port. Keyboards and displays came later, with the arrival of personal computers. Apart from being more historically accurate, a serial port will give me more flexibility. I can connect the computer to anything from an old dumb terminal bought on eBay to a modern laptop. Not to mention that it may be fairly easy to connect the machine to the Internet over the SLIP protocol, which is one of the ultimate goals of this project.

Serial communication is about converting parallel data (a bus) into a serial representation (one wire), delimited by control bits that depend on the protocol used, and back again. I could attempt to build a serial port out of TTL chips, or use a readily available UART chip to take care of all the details of parallel-to-serial and serial-to-parallel conversion. Since building a serial port from scratch sounds to me like a project of its own, I decided to go for the latter option and use one of the commercial off-the-shelf UARTs. My choice is the 16550, which is still available, fairly inexpensive and comes in a 40-pin DIP package. The 16550 is a successor of the 8250 and 16450 UARTs, which were common in early PCs. It is typically paired with a MAX232 or similar chip to convert between the 16550's TTL voltage levels and RS-232 levels.
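To make the framing concrete, here is a minimal C sketch of what a UART transmitter does to one byte in the common 8-N-1 format: a low start bit, eight data bits sent least significant bit first, and a high stop bit. The 16550 does all of this in hardware; the functions `frame_8n1` and `deframe_8n1` are hypothetical and only model the bit sequence at the UART's TTL pin (before the MAX232, which inverts the levels for RS-232).

```c
#include <stdint.h>

/* Bit times in one 8-N-1 frame: start + 8 data + stop. */
#define FRAME_BITS 10

/* Fill bits[0..9] with the TTL line levels (0 = low/space, 1 = high/mark)
 * for one 8-N-1 frame carrying `byte`. The idle line is high. */
void frame_8n1(uint8_t byte, uint8_t bits[FRAME_BITS])
{
    bits[0] = 0;                          /* start bit: always low */
    for (int i = 0; i < 8; i++)
        bits[1 + i] = (byte >> i) & 1u;   /* data bits, LSB first */
    bits[9] = 1;                          /* stop bit: always high */
}

/* Receiver side: recover the byte from a frame.
 * Returns -1 if the start or stop bit is wrong (a framing error). */
int deframe_8n1(const uint8_t bits[FRAME_BITS])
{
    if (bits[0] != 0 || bits[9] != 1)
        return -1;
    int byte = 0;
    for (int i = 0; i < 8; i++)
        byte |= (bits[1 + i] & 1) << i;
    return byte;
}
```

Adding parity or a second stop bit only changes the frame length and the extra bits, which is exactly what the 16550's programmable line format controls.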

As per its datasheet, the 16550D chip (its current revision) has the following features:

- It performs serial-to-parallel (device to CPU) and parallel-to-serial (CPU to device) conversion.

- It has a programmable interrupt system that signals the CPU on data transmission, data reception or specific line status conditions.

- It has 16-byte FIFO buffers on both the transmitter and the receiver to reduce the load on the CPU (by firing interrupts less frequently).

- It adds and removes the asynchronous communication control bits (start, stop and parity) in a programmable format (such as the common 8-N-1 or something more exotic).

- It provides the standard modem control signals: RTS and DTR as outputs, and CTS, DSR, RI and DCD as inputs.

- It exposes 12 programmer-visible registers to control the UART and read its status.

- It has a programmable baud rate generator, allowing any baud rate from 50 to 128000, depending on the divisor setting and the crystal frequency used.

- It is fully TTL compatible.
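The baud rate generator divides the input clock by 16 times a 16-bit divisor, so the divisor for a wanted rate is the clock divided by 16 times the baud rate. A minimal sketch, assuming the widely used 1.8432 MHz crystal (the post does not say which crystal will be used); the helper names are hypothetical. The divisor is written as two bytes, DLL (low) and DLM (high), while the DLAB bit (bit 7) of the line control register is set.

```c
#include <stdint.h>

/* baud = clock / (16 * divisor)  =>  divisor = clock / (16 * baud).
 * Integer division truncates; pick a crystal that divides evenly. */
uint16_t baud_divisor(uint32_t clock_hz, uint32_t baud)
{
    return (uint16_t)(clock_hz / (16u * baud));
}

/* Baud rate actually produced by a divisor, to check the error. */
uint32_t actual_baud(uint32_t clock_hz, uint16_t divisor)
{
    return clock_hz / (16u * divisor);
}

/* Split the divisor into the two 8-bit latch values. */
uint8_t divisor_low(uint16_t d)  { return (uint8_t)(d & 0xFFu); }  /* DLL */
uint8_t divisor_high(uint16_t d) { return (uint8_t)(d >> 8); }     /* DLM */
```

With 1.8432 MHz, 9600 baud needs a divisor of 12 and 115200 baud a divisor of 1, which is why that crystal frequency is so popular: every standard rate divides it exactly.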

It seems from the above that the 16550 does everything I need on its own. All I have to do is map it correctly into my CPU’s I/O space with a few decoder chips, assign it one of the interrupt lines, tie D0..D7 to my data bus and give it a try. The additional components I need are a crystal oscillator, the already mentioned MAX232 voltage converter and, of course, a DB9 connector.

The problem I may have with the 16550 is its timing. The full read/write cycle of the UART is 280ns, which is quite long. I have already been thinking of extending my clock subsystem with extra logic that halves the clock frequency whenever the CPU accesses the I/O space (slow devices), signalled by a /SLOW line. It may turn out that now is the right moment to implement it. Otherwise, the devices I attach to the CPU become a bottleneck that significantly reduces the maximum CPU clock frequency.
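The effect of /SLOW on the clock budget is simple arithmetic. The sketch below assumes that an I/O access normally lasts one clock period and that /SLOW stretches it to two; it ignores decoder propagation delays and setup/hold margins, so the results are optimistic upper bounds rather than design values. The function names are hypothetical.

```c
/* Highest CPU clock (kHz) at which an access lasting `clocks` periods
 * still covers a device cycle of `device_ns` nanoseconds:
 *   clocks * period >= device_ns  =>  f <= clocks / device_ns        */
unsigned max_clock_khz(unsigned device_ns, unsigned clocks)
{
    return (unsigned)(clocks * 1000000ull / device_ns);
}

/* Clock periods an access must span at a given period:
 * ceil(device_ns / period_ns). */
unsigned clocks_needed(unsigned device_ns, unsigned period_ns)
{
    return (device_ns + period_ns - 1) / period_ns;
}
```

For the 280ns cycle this gives roughly 3.57 MHz with single-cycle access and 7.14 MHz with /SLOW, so stretching only I/O accesses roughly doubles the usable clock for everything else.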

Memory mapping

I have previously assigned the range $1000-$1FFF to I/O (devices). Now it is time to use it. I am assuming each device will need no more than a 16-byte control block, so I am going to divide this space into chunks of this size and dedicate them to the devices. The UARTs (I want to have two of them) will occupy the first two blocks. As a result, the memory map will look like this:

| Range | Description |
|---|---|
| $0000-$001F | interrupt vector |
| $0020-$0FFF | kernel area |
| $1000-$1FFF | I/O area (devices) |
| $1000-$100F | UART0 |
| $1010-$101F | UART1 |
| $2000-$2FFF | system registers |
| $2000 | REG_CODEPAGE0 |
| $2200 | REG_CODEPAGE1 |
| $2400 | REG_DATAPAGE0 |
| $2600 | REG_DATAPAGE1 |
| $3000-$FFFF | general purpose RAM |
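Since every top-level region except the vector/kernel split falls on a 4 KB boundary, the map can be decoded from address lines A15..A12 alone. A minimal sketch of that classification, assuming a top-nibble decoder (the post does not yet show the decoding logic); the enum and function names are hypothetical.

```c
#include <stdint.h>

/* Top-level regions of the 64 KB logical address map. */
typedef enum {
    REGION_VECTORS,   /* $0000-$001F interrupt vector    */
    REGION_KERNEL,    /* $0020-$0FFF kernel area         */
    REGION_IO,        /* $1000-$1FFF I/O area (devices)  */
    REGION_SYSREGS,   /* $2000-$2FFF system registers    */
    REGION_RAM        /* $3000-$FFFF general purpose RAM */
} region_t;

/* A15..A12 pick the 4 KB region; the vector/kernel boundary at $0020
 * is not a 4 KB boundary, so it is checked separately. */
region_t decode_region(uint16_t addr)
{
    switch (addr >> 12) {
    case 0x0: return addr < 0x0020 ? REGION_VECTORS : REGION_KERNEL;
    case 0x1: return REGION_IO;
    case 0x2: return REGION_SYSREGS;
    default:  return REGION_RAM;
    }
}
```

In TTL terms, the `switch` corresponds to a 4-to-16 decoder on the top nibble, with outputs 3 to 15 combined into the RAM select.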

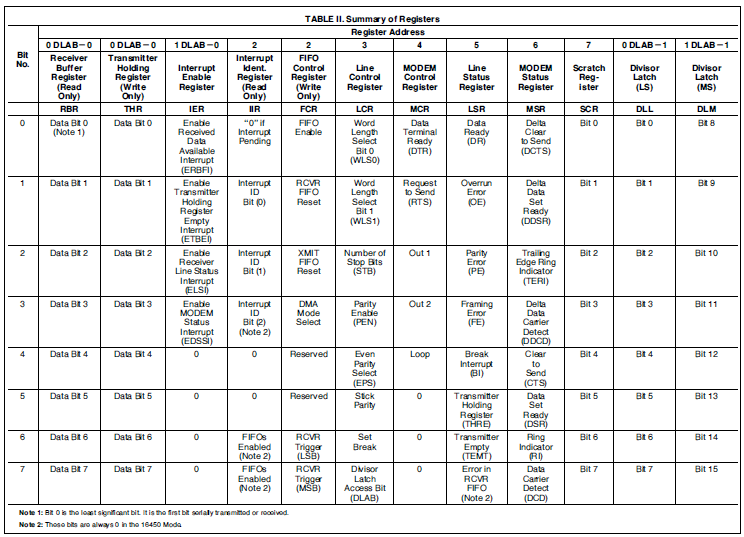

Each UART will occupy only the first 8 bytes of the assigned space (the other 8-byte block will mirror the same physical registers). The trick with 12 registers mapping only to 8-byte address space is that some write-only registers share the same address with other read-only registers. Here is an extract from National Semiconductors’ 16550D datasheet showing what registers the device exposes.
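The register map can be written as a lookup on three inputs: address lines A2..A0, the direction of the access, and the DLAB bit (bit 7 of the line control register). This is a sketch based on the 16550 register layout; the function name is hypothetical, and it returns only register names, not behavior.

```c
#include <stdbool.h>

/* Name of the 16550 register selected by A2..A0, the access direction
 * and DLAB. Direction and DLAB act as extra select bits, which is how
 * twelve registers fit into eight addresses. */
const char *uart_reg_name(unsigned offset, bool is_write, bool dlab)
{
    switch (offset & 7u) {               /* only A2..A0 reach the chip */
    case 0:  return dlab ? "DLL" : (is_write ? "THR" : "RBR");
    case 1:  return dlab ? "DLM" : "IER";
    case 2:  return is_write ? "FCR" : "IIR";
    case 3:  return "LCR";
    case 4:  return "MCR";
    case 5:  return "LSR";
    case 6:  return "MSR";
    default: return "SCR";
    }
}
```

Counting the distinct names gives RBR, THR, IER, IIR, FCR, LCR, MCR, LSR, MSR, SCR, DLL and DLM: the twelve registers from the feature list.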

IRQ assignment

My CPU has 8 priority-driven interrupt input lines, IRQ0 being the highest priority. I will assign UART0 and UART1 to IRQ channels #2 and #3 respectively, leaving the highest-priority channels for something else I may want to add in the future (like a timer interrupt from an RTC or an IDE controller interrupt). For the sake of documentation, here is how my IRQ assignment map will look:

| Channel | Device |
|---|---|
| IRQ0 (highest priority) | unused |
| IRQ1 | unused |
| IRQ2 | UART0 |
| IRQ3 | UART1 |
| IRQ4 | unused |
| IRQ5 | unused |
| IRQ6 | unused |
| IRQ7 (lowest priority) | unused |

The drawback of this approach is that the UARTs are not equal: UART0 has a higher-priority IRQ than UART1. I don’t see a real problem with that at this point, so I will not add any multiplexing on the IRQ lines of the two 16550s and will leave them independent, as shown above.
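The inequality is easy to see if the priority logic is modelled in software: when several lines are pending, the lowest-numbered one wins. This is a hypothetical sketch of that decision, not a description of how the CPU’s interrupt hardware is actually built; the `IRQ_UART0`/`IRQ_UART1` masks follow the assignment table.

```c
#include <stdint.h>

/* Bit n of the pending word = IRQn requested. */
#define IRQ_UART0 (1u << 2)
#define IRQ_UART1 (1u << 3)

/* Return the highest-priority pending IRQ (IRQ0 highest, IRQ7 lowest),
 * or -1 if nothing is pending. */
int highest_pending_irq(uint8_t pending)
{
    for (int n = 0; n < 8; n++)
        if (pending & (1u << n))
            return n;
    return -1;
}
```

If both UARTs raise an interrupt at the same time, UART0 (IRQ2) is serviced first, and UART1 waits until UART0 clears its request.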

Next steps

Now it is time to run CadSoft Eagle again, prepare the initial revision of the schematics and start wire wrapping. Both UARTs will occupy one Eurocard-size (100x160mm) prototyping card, which I am going to wire wrap using a slightly different technique: I will solder pins to the card instead of using an expensive wire-wrapping board. I hope to report on progress soon, so stay tuned.

Glad you’re back. I hope you enjoyed your vacation.

I hadn’t realized the issue with the slow response of the 16C550. I’m making further plans to employ the same chip. My clock period is 250ns but, according to the timing diagrams in the Z80 manual, it takes two periods (500ns) from the read request to the actual read, so I should be safe. Nevertheless, I don’t like to operate so close to the limit, so thank you for raising the alert.

You mentioned a curious technique to address the problem: doubling the clock period when slow devices are accessed. An alternative is the one employed by the Z80: a “wait” input signal. While it is asserted, the CPU simply holds in the middle of the cycle, so all signals stay frozen until the device has finished. Of course, it is the job of the slow device to provide the “wait” signal when it detects that it has been selected. I am not sure at this moment which of the two techniques would work better.

And now, a question: how do you share two registers at exactly the same address? Honestly, I can’t figure it out… And another question: what is the purpose of mirroring registers?

Let me share another thought with you (and another question). It is about networking.

My goal with LC-81 is a little different from yours. My intention is to use the machine more as a (programmable) tool in my engineering shop and/or (at most) to run math-oriented demo programs, just to give the impression that the machine is “useful for something”. Therefore, networking is not on my horizon.

However, it once occurred to me that if I ever wanted LC-81 to communicate with my home network, it would be for the sole purpose of transferring files… which sounds pretty much like FTP, doesn’t it?

So I started to consider the Kermit protocol instead of SLIP. Software exists for receiving Kermit messages over a serial line and “translating them into something else”, but the fun part would be to write such code from scratch, so that it does only what’s required and nothing else.

In my case, I was thinking of writing a “Kermit Gateway” which, running on a Linux machine, translates Kermit packets coming through the serial port into FTP commands. This “Kermit Gateway” would act as an FTP client from the network viewpoint, and as a Kermit server from the serial line viewpoint.

This would allow me to transfer files over the serial line (using the Kermit protocol), with the Kermit code in LC-81, the “Kermit Gateway” on the Linux PC, and the whole TCP/IP stack provided by Linux itself.

The question is: What do you think?

You’re right about the “wait” signal. It is another (and commonly used) technique to satisfy the timing requirements of slow devices. What I don’t like about it is that the affected device itself needs to assert and deassert the signal. While it is easy to assert the signal (e.g. by setting a flip-flop when the device is selected), it may be somewhat tricky to deassert it (how do you know the device has actually finished?). The approach with a “slow” signal controlled by the CPU is less efficient (you always devote two full cycles to each device read/write, instead of using only as much time as you need), but it is obviously easier to implement in hardware (and it works in the same manner for any device). Nevertheless, good point: if I really encounter timing issues, I will consider this option, too.

Sharing the same address between two registers is easy when one of them is read-only and the other write-only; there is no magic. Imagine you have an “R/W” signal that is logic 0 when you want to read from the address and logic 1 when you write to it. Now, when you decode the address, use this signal as an extra address bit. Your address decoding circuit will then select one of the two registers. That’s exactly what the 16550 does. The same applies to any other scenario where you have one address, two registers and a dedicated signal that distinguishes the operation (and, as a consequence, selects the register). With two such extra address bits you could have four registers at the same address, and so on.
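The idea of R/W as an extra address bit can be modelled with a toy device: the register index is the real address bits with the R/W bit appended, so each address has a read-side and a write-side register that never alias. This is a hypothetical model (`device_t`, `select_reg` and `bus_cycle` are illustrative names), using the R/W convention from the paragraph above: 0 = read, 1 = write.

```c
#include <stdint.h>

enum { RW_READ = 0, RW_WRITE = 1 };

/* A device with ADDR_LINES address lines; R/W is one more select bit,
 * so it holds twice as many registers as it has addresses. */
#define ADDR_LINES 1
#define NUM_REGS   (2u << ADDR_LINES)

typedef struct { uint8_t reg[NUM_REGS]; } device_t;

/* The "decoder": register index = address bits followed by R/W. */
unsigned select_reg(unsigned addr, unsigned rw)
{
    return ((addr & ((1u << ADDR_LINES) - 1u)) << 1) | (rw & 1u);
}

/* One bus cycle: a write stores into the write-side register, a read
 * returns the read-side register at the same address. */
uint8_t bus_cycle(device_t *d, unsigned addr, unsigned rw, uint8_t data)
{
    unsigned i = select_reg(addr, rw);
    if (rw == RW_WRITE)
        d->reg[i] = data;
    return d->reg[i];
}
```

Writing 0xAA to address 0 and then reading address 0 returns whatever the read-side register holds, not 0xAA, which is exactly how the 16550’s THR and RBR behave at offset 0.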

Mirroring registers is not done on purpose in my case. I am dedicating 16 bytes to each device block in memory. I need the four lowest bits to address locations within the block (with the remaining 17 bits of the address bus fixed to point to a specific 16-byte block in memory). For the 16550, however, I need only 8 address locations, so I am using only the three lowest bits and disregarding the fourth one. As a result, regardless of whether I reference the lower 8 bytes or the higher 8 bytes of the device block, the hardware points to the same UART registers. Of course, I could map devices less uniformly and use only as many addresses as each device requires, but this would complicate address decoding.
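The mirroring falls out of the decoding directly: the block number comes from the address bits above A3, while the UART sees only A2..A0, so A3 is simply lost. A minimal sketch for addresses inside the I/O area, using the block layout from the memory map; `uart_decode` is a hypothetical name.

```c
#include <stdint.h>

#define IO_BASE    0x1000u
#define BLOCK_SIZE 16u

/* Split an I/O-area address ($1000-$1FFF only) into the device block
 * number and the register offset the 16550 actually sees. A3 is not
 * wired to the chip, so the upper 8 bytes mirror the lower 8. */
void uart_decode(uint16_t addr, unsigned *block, unsigned *reg)
{
    *block = (addr - IO_BASE) / BLOCK_SIZE;   /* 0 = UART0, 1 = UART1 */
    *reg   = addr & 0x7u;                     /* A2..A0 only */
}
```

Both $1003 and $100B therefore land on register 3 (LCR) of UART0.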

Now, regarding your networking question. I am not sure it is even correct to consider “Kermit instead of SLIP”; they are not comparable. If you recall the seven layers of the OSI networking model, then for decent communication you need at least the physical layer (layer 1) and the application layer (layer 7). In our case, the physical layer is RS-232, and the application (protocol) layer would be Kermit (or FTP). Whatever happens in the middle layers aims at providing reliable communication (framing, routing, integrity, error handling and recovery, encryption/decryption, adding statefulness and the like). For the application you described you certainly don’t need all of those, and writing “something” from scratch is, IMO, a good solution if that solution is to do what is required and nothing else. However, that “something” would be at least part of the Kermit protocol (layer 7) and some code to send and receive data (layers 6-2) over RS-232 (layer 1). The 16550 already gives you some capabilities of layers 6-2 by implementing serial communication (which is a higher-level notion than RS-232) and FIFO buffers, but you need some extra code anyway. This code may again be something custom that does “the very job”, or a TCP/IP stack (layers 4-3) over SLIP (layer 2). If I ever get to the point of implementing networking, I think I will pursue a more generic solution with support for TCP/IP.
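To show how thin the SLIP layer is, here is a sketch of SLIP framing as described in RFC 1055: a packet is terminated by END (0xC0), and any END or ESC (0xDB) byte inside it is replaced by a two-byte escape sequence. There is no checksum or retransmission; integrity is left to IP and TCP above it. The function name `slip_encode` is hypothetical.

```c
#include <stddef.h>
#include <stdint.h>

/* SLIP special characters (RFC 1055). */
#define SLIP_END     0xC0
#define SLIP_ESC     0xDB
#define SLIP_ESC_END 0xDC
#define SLIP_ESC_ESC 0xDD

/* Encode one packet into `out`, which must hold 2 * len + 2 bytes in the
 * worst case. A leading END flushes line noise, as RFC 1055 suggests.
 * Returns the number of bytes written. */
size_t slip_encode(const uint8_t *in, size_t len, uint8_t *out)
{
    size_t n = 0;
    out[n++] = SLIP_END;
    for (size_t i = 0; i < len; i++) {
        if (in[i] == SLIP_END) {
            out[n++] = SLIP_ESC;
            out[n++] = SLIP_ESC_END;
        } else if (in[i] == SLIP_ESC) {
            out[n++] = SLIP_ESC;
            out[n++] = SLIP_ESC_ESC;
        } else {
            out[n++] = in[i];
        }
    }
    out[n++] = SLIP_END;
    return n;
}
```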

I don’t really know much about Kermit, so I am not sure how challenging it would be to bridge Kermit and FTP, but your plan of having Kermit in LC-81 and a gateway outside sounds reasonable.

Your technique of saving address space by letting RD/WR participate in address decoding is definitely one I will copy when I get to that point.

As for dealing with slow devices, I think that is less critical nowadays. In the old days, ferrite core memory, for example, had access times measured in microseconds… that’s slow! Nevertheless, the issue is still interesting and not entirely unlikely for us.

I think the peripheral doesn’t actually have to “know” when it has finished; instead, it can activate the wait signal and keep it active for a fixed period of time. Say, for instance, it requires a 20-microsecond RD signal: the peripheral simply reacts to RD by producing a 20-microsecond wait pulse (possibly by counting clock cycles), which makes the CPU hold the RD signal that long.
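The fixed-length wait pulse described above amounts to a loadable down-counter per peripheral. Here is a minimal cycle-by-cycle model, assuming the counter is loaded on the rising edge of the chip select and WAIT stays asserted while it is non-zero; `waitgen_t` and `waitgen_clock` are hypothetical names. With the 250ns clock mentioned earlier, a 20 microsecond hold is 20000 / 250 = 80 cycles.

```c
#include <stdbool.h>

/* Per-peripheral wait-state generator: on selection it asserts WAIT
 * for a fixed number of clock cycles, without knowing when the device
 * "really" finished. */
typedef struct {
    unsigned wait_cycles;   /* fixed hold time, in CPU clock cycles */
    unsigned counter;       /* WAIT cycles still to go */
    bool     was_selected;  /* chip select level on the previous edge */
} waitgen_t;

/* Call once per clock edge with the current chip select level.
 * Returns the WAIT level the CPU samples on this edge (true = hold). */
bool waitgen_clock(waitgen_t *w, bool selected)
{
    if (selected && !w->was_selected)   /* device just got selected */
        w->counter = w->wait_cycles;
    w->was_selected = selected;
    if (w->counter > 0) {
        w->counter--;
        return true;
    }
    return false;
}
```

Each peripheral can get its own `wait_cycles`, which is the per-device tuning argued for below; the CPU-wide /SLOW approach corresponds to one fixed value shared by all devices.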

You may be right that placing exactly the same mechanism in the CPU would simplify the peripherals’ circuitry… but only at the cost of taxing all peripherals equally, at the pace of the slowest one. And what if you later find yourself working with an even slower device, for which the CPU delay you’ve already set is not long enough?

Definitely, placing the “holder” in the peripherals (instead of the CPU) allows you to adjust the hold time on a per-peripheral basis, which should result in better overall performance… at the cost, of course, of making things a little more complicated on the peripheral side. So it is a matter of design choice (as usual in engineering).

Kermit and SLIP are indeed not comparable, because they serve different purposes. What is comparable, though, is the choice between those two different purposes.

In this case, the purpose is not “to network LC-81” through the serial port, for which SLIP would be the natural choice. Instead, the purpose is to provide a way to transfer files through the serial port: it is point-to-point communication, not a network proper. And this is where the Kermit protocol comes into play… as it has since 1981.

Nevertheless, I don’t think I will write such a thing. I’m not sure I am capable of it.
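For comparison with SLIP framing, a basic Kermit packet carries its own sequence number and checksum, so the reliability layer lives inside the file transfer protocol itself. The sketch below follows the basic packet layout from the Kermit Protocol Manual (MARK, LEN, SEQ, TYPE, DATA, CHECK, with the single-character type 1 checksum). It assumes the data is already encoded (control characters prefixed) and short enough for a normal packet; long packets, other block check types and the rest of the protocol are left out, and the function name is hypothetical.

```c
#include <stddef.h>
#include <stdint.h>

#define KERMIT_SOH 0x01   /* MARK: start of every packet */
#define KERMIT_EOL 0x0D   /* CR terminates the packet on the line */

/* tochar(): shift a small number (0..94) into the printable range. */
static uint8_t tochar(unsigned x) { return (uint8_t)(x + 32u); }

/* Build one packet:  MARK LEN SEQ TYPE DATA... CHECK EOL
 * LEN counts the characters after itself (SEQ, TYPE, DATA, CHECK), so
 * `len` must be at most 91. Returns the bytes written (len + 6). */
size_t kermit_packet(unsigned seq, char type,
                     const uint8_t *data, size_t len, uint8_t *out)
{
    size_t n = 0;
    out[n++] = KERMIT_SOH;
    out[n++] = tochar((unsigned)len + 3u);
    out[n++] = tochar(seq % 64u);
    out[n++] = (uint8_t)type;
    for (size_t i = 0; i < len; i++)
        out[n++] = data[i];

    /* Type 1 check: sum LEN..last DATA char, fold bits 6-7, keep 6 bits. */
    unsigned s = 0;
    for (size_t i = 1; i < n; i++)
        s += out[i];
    out[n++] = tochar((s + ((s & 192u) >> 6)) & 63u);
    out[n++] = KERMIT_EOL;
    return n;
}
```

A data packet (`'D'`) carrying “Hi” with sequence number 0 comes out as SOH, `%`, space, `D`, `H`, `i`, `Z`, CR: every byte except the framing characters is printable, which is what lets Kermit pass through terminals and links that SLIP could not use.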